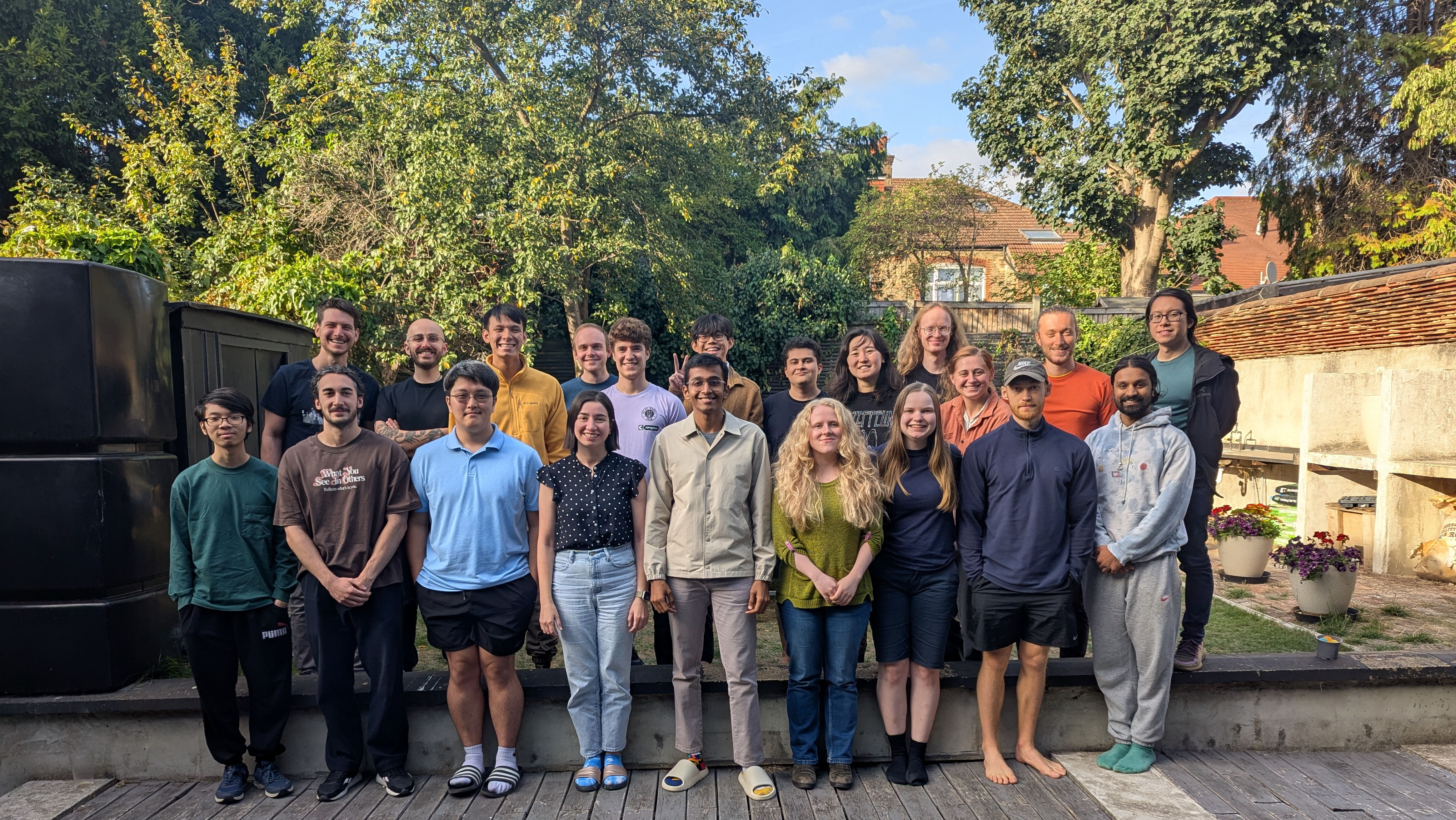

The inaugural AI Security Bootcamp brought together 20 researchers and engineers for four intensive weeks of training in security fundamentals, AI infrastructure security, and AI-specific threats. The program culminated in a week of capstone projects where participants explored cutting-edge security research.

The curriculum is available on GitHub for self-study.

Curriculum

Week 1: Security Fundamentals

- Fundamentals of Cryptography: Stream ciphers (LCG), block ciphers (DES, SPN), hashing (MD5), message authentication (HMAC), public-key cryptography (RSA), cryptanalysis (crib dragging, meet-in-the-middle, padding oracle attacks)

- Network Security: Traffic analysis with Wireshark, HTTP/HTTPS man-in-the-middle interception, certificate pinning, NFQUEUE, covert channels (DNS, ICMP)

- Threat Modeling: STRIDE methodology, attack trees, adversary capability modeling

- Penetration Testing: Network reconnaissance, enumeration, password cracking, Metasploit exploitation, privilege escalation, persistence

Week 2: Infrastructure Security

- Containerization: Container fundamentals (chroot, cgroups, namespaces), network isolation, container escapes, syscall monitoring

- Supply Chain Security: Pickle deserialization attacks, dependency confusion, model provenance

- Reverse Engineering: Ghidra, buffer overflow, crafting shellcode exploits, bypassing stack canaries

- Application & Cloud Security: XSS, CSRF, SSRF, SQL injection, command injection (OWASP Top 10), cloud Identity and Access Management

Week 3: AI-Specific Security

- Adversarial ML: Crafting adversarial examples, attacks on image classifiers, watermarking, trojans

- LLM Security: Tokenization, prompt injection, model weight extraction attacks, model editing, abliteration

- GPU & Datacenter Security: NVIDIA Container Toolkit exploits, GPU isolation, confidential computing

- AI Application Security: MCP (Model Context Protocol) security, RAG injection, hardware supply chain

Week 4: Capstone Project

- Implement novel security solutions, replicate sophisticated attacks, or conduct security research

- Work with expert mentors on cutting-edge AI security challenges

- Present findings to cohort and industry professionals

Who was this for?

AISB was designed for researchers and engineers who care about securing the development of AI systems. Ideal participants had prior experience with deep learning (training/evals) and were comfortable with Python.

The program ran full-time and in person in London. All expenses, including flights, accommodation, and meals, were covered.

Capstone Projects

MCP Protector Proxy

Andrew: A security layer for the Model Context Protocol (MCP) that provides tool inspection, permission controls, sanitization against prompt injection, and rate limiting.

TAR Poison

Chris: Data poisoning attacks on Support Vector Machines used in legal document discovery for Technology Assisted Review.

Chasseur

Diana & Leo: Catching misbehaving coding agents red-handed by deploying endpoint detection and response (EDR) locally to detect and mitigate suspicious command execution attempts.

Breaking Taylor Unswift

Ethan: Demonstrates weight extraction from Taylor series-obfuscated neural networks, undermining model exfiltration protections.

PicoCTF Evals

Irakli: Implementation of capture-the-flag evaluations using the Inspect Evals framework for AI security testing.

Autonomy caps for Agents governance

Jaeho: Policy framework proposing autonomy caps for AI agents in government systems with practical usage guidelines.

ML Supply Chain Attacks

Jakub: Proof-of-concept pickle file payload injection and MD5 hash collision attacks on ML model distribution.

Red-teaming Activation Monitors

Jord: Adversarial attacks on AI monitoring systems via spurious correlations and backdoor trigger coordination.

Watermark Stealing Replication

Juan: Replication of watermark stealing attacks on machine learning models with an interactive Streamlit demo.

ML Supply Chain Vulnerabilities

Katie: Paper replication exploring attack scenarios in outsourced training and transfer learning.

Immune

Leo: Malware detection using static binary analysis features to identify malicious software.

Adversarial Attack Detector

Lorenzo: Using linear probes to detect and analyze adversarial attacks on neural networks.

Audio Attacks on Whisper

Rhita: Adversarial attacks on OpenAI's Whisper automatic speech recognition model.

Here Comes the Worm

Sam: AI security research exploring worm-like behavior in AI systems.

Team

Pranav Gade

Research engineer at Conjecture. Created AISB to bridge AI safety and security, and leads curriculum design and program direction.

Jan Michelfeit

Security lead at Conjecture. Designs AISB's hands-on labs and capstone projects, drawing on 10+ years securing complex systems and ML infrastructure.

Nitzan Shulman

Head of Cyber Security at Heron AI Security Initiative. 6+ years of security research, specializing in IoT, robotics, malware, and AI security.

Jinglin Li

Software engineer and educator. Keeps AISB running smoothly.

Acknowledgments

This program was supported by Open Philanthropy.